Sophia Holland

Data Engineer

Applications of Explainable Artificial Intelligence

Explainable Artificial Intelligence (XAI) is transforming the way we use AI across many sectors. Unlike opaque "black-box" models, XAI lets us understand how and why a model reaches a decision. Did the AI reject a loan? Did it detect a cybersecurity threat? Did it suggest a medical treatment? With XAI, those decisions can be explained clearly, reliably, and usefully.

In this article, we show you the main real-world applications of explainable AI. From healthcare and agriculture to education, finance, and the environment, each sector is using this technology to make decisions that are safer, more ethical, and easier to understand.

If you are looking for concrete examples of XAI or want to understand how explainable AI is used in practice, keep reading: this is your starting point for understanding how transparency in artificial intelligence is changing the rules of the game.

The main applications of XAI today are:

Environment and Energy

- Classification of jobs in green vs. traditional industries.

- Energy models (grid stability, energy forecasting).

- Evaluation of climate models.

- Analysis of environmental factors contributing to wildfires.

Education

- Educational tools with explainable feedback (e.g., RiPPLE, FUMA, AcaWriter).

- Transparency in learning management systems (LMS).

- Improvement of personalized learning.

- Identification of students' study interests to inform curriculum design.

Social Media

- Explainable detection of hate speech.

- Detection of misinformation across social platforms.

- Explanations that incorporate social context (influence of a user's network, diffusion metrics).

Cybersecurity

- Explainable detection of malware.

- Intrusion and anomaly detection in networks.

- Interpretable rules for classification in cybersecurity.

Finance

- Credit risk assessment.

- Financial fraud detection.

- Integration with blockchain for traceability.

- Peer-to-peer (P2P) loan models with transparent decisions.

Law

- Support for judicial decisions and contract analysis.

- Evaluation of AI-generated evidence.

- Justification of decisions in legal processes.

Agriculture

- Explainable crop recommendation systems.

- Analysis of agricultural conditions (soil, climate).

- Support for precision and sustainable agriculture.

Health

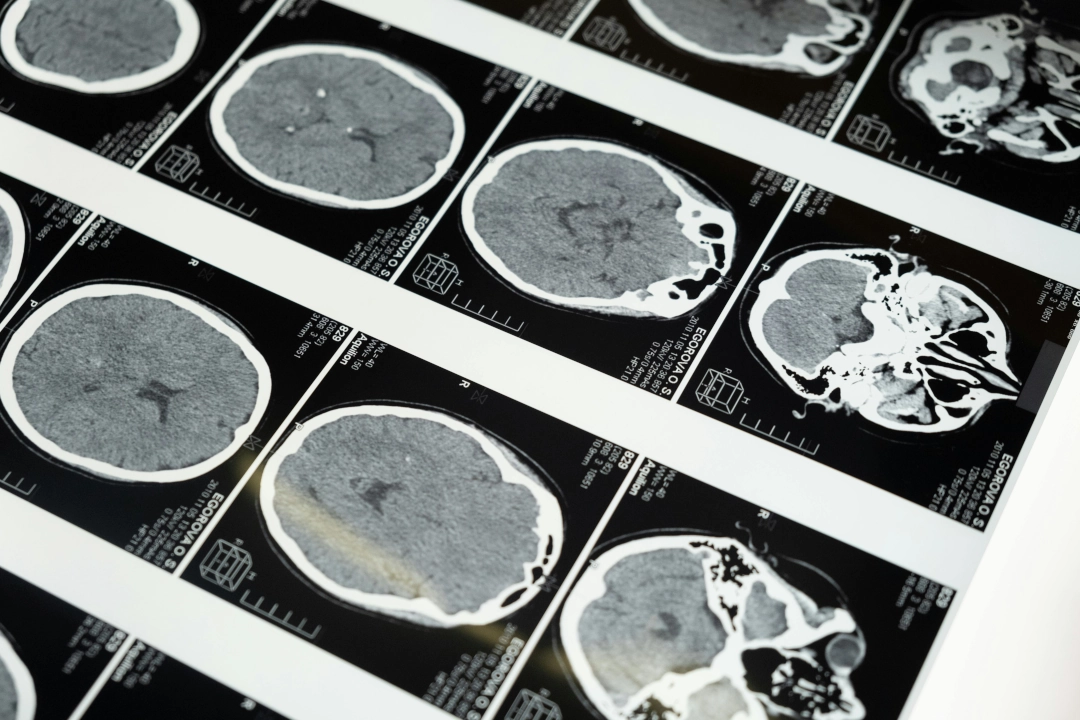

- Medical diagnosis (using techniques like SHAP, LIME, Grad-CAM).

- Precision medicine with interpretable models.

- Counterfactual explanations in clinical practice.

- Applications in emergency medicine (triage, critical decisions).

- Attention maps and saliency maps for medical images.

- Secure collaboration through federated learning.
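Counterfactual explanations, listed under Health, answer the question "what would have to change for the decision to be different?" As a minimal sketch, assuming a hypothetical logistic readmission-risk model with invented weights (`WEIGHTS`, `BIAS`, `THRESHOLD`, and the search grids are all illustrative), the code below searches one actionable feature at a time for the nearest value that flips a high-risk prediction. Practical counterfactual methods typically search over several features jointly and add plausibility constraints.

```python
import math

# Hypothetical logistic readmission-risk model. Weights, bias, and threshold
# are invented for illustration and have no clinical validity.
WEIGHTS = {"systolic_bp": 0.03, "hba1c": 0.6, "age": 0.02}
BIAS = -10.0
THRESHOLD = 0.5
# Actionable features and assumed search grids (low, high, step).
# Age is deliberately left out: a counterfactual should not ask a patient
# to change something that cannot be changed.
SEARCH = {"systolic_bp": (90, 200, 1), "hba1c": (4.0, 14.0, 0.1)}


def risk(patient):
    """Logistic risk score from a weighted sum of the patient's features."""
    z = BIAS + sum(w * patient[f] for f, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def single_feature_counterfactuals(patient):
    """For each actionable feature, find the grid value closest to the
    current one that flips the decision, holding other features fixed."""
    high_risk = risk(patient) >= THRESHOLD
    results = {}
    for feat, (low, high, step) in SEARCH.items():
        n_steps = int(round((high - low) / step))
        grid = [low + k * step for k in range(n_steps + 1)]
        grid.sort(key=lambda v: abs(v - patient[feat]))  # nearest first
        for v in grid:
            candidate = dict(patient, **{feat: v})
            if (risk(candidate) >= THRESHOLD) != high_risk:
                results[feat] = round(v, 2)
                break
    return results


patient = {"systolic_bp": 165, "hba1c": 9.0, "age": 70}
print(f"risk = {risk(patient):.2f}")
for feat, value in single_feature_counterfactuals(patient).items():
    print(f"decision flips if {feat} were {value} (currently {patient[feat]})")
```

For the sample patient, the decision flips at a systolic pressure of 106 or an HbA1c of 6.0. Restricting the search to actionable features is the key design choice: an explanation that depends on changing age gives a clinician nothing to act on.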

Environment and Energy: Towards a More Transparent Transition

Explainable AI (XAI) is playing a key role in the green transition. For example, when a model classifies job offers as "green" or "traditional", XAI reveals which features drove each label, helping policymakers understand how the job market is evolving in response to climate change. In wildfire prediction, techniques like SHAP identify the factors, such as soil temperature or solar radiation, that increase risk in different regions. This turns the models into strategic tools for environmental prevention.
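SHAP attributions are based on Shapley values from cooperative game theory: each feature's contribution is its average marginal effect on the prediction over all orders in which features could be added. As a minimal sketch, assuming a made-up three-feature wildfire-risk model (`wildfire_risk`, its weights, and the baseline values are all hypothetical), the exact Shapley values can be computed by brute force. Real SHAP tooling uses faster approximations and usually averages over a background dataset rather than a single baseline point.

```python
from itertools import combinations
from math import factorial


# Hypothetical toy wildfire-risk model: feature names, weights, and the
# interaction rule are invented for illustration only.
def wildfire_risk(soil_temp, solar_rad, humidity):
    risk = 0.04 * soil_temp + 0.002 * solar_rad - 0.03 * humidity
    # Interaction: hot soil adds extra risk only when the air is also dry.
    if soil_temp > 30 and humidity < 20:
        risk += 0.5
    return risk


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating every coalition of features.

    Features outside a coalition are replaced by their baseline value.
    The cost grows as 2**n, so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition):
        args = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(*args)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(len(s)) * factorial(n - len(s) - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


features = ["soil_temp", "solar_rad", "humidity"]
x = [38.0, 900.0, 12.0]         # a hot, sunny, dry day (hypothetical)
baseline = [22.0, 500.0, 45.0]  # e.g. regional averages (hypothetical)

phi = shapley_values(wildfire_risk, x, baseline)
for name, contribution in zip(features, phi):
    print(f"{name:>10}: {contribution:+.3f}")
```

The three contributions sum to `wildfire_risk(*x) - wildfire_risk(*baseline)`, and the 0.5 interaction bonus is split equally between `soil_temp` and `humidity`, since it only appears when both take their observed values.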

Education: Explanations that Drive Learning

In the classroom, it is not enough for a system to tell a student what to study; it is essential to know why it recommends it. XAI makes it possible to give both students and teachers understandable feedback grounded in real data. Tools like RiPPLE and AcaWriter already use AI-generated explanations to improve the educational process. The result is a more personalized experience, with pedagogical decisions supported by clear logic and transparency.

Social Media: Moderation with Clear Reasons

Have you ever had a post removed without knowing why? XAI aims to prevent such situations by offering clear explanations for decisions such as flagging hate speech or misinformation. Interpretable models, together with tools like LIME, help us understand which elements of the content triggered an alert. Moreover, by incorporating social data, such as interaction levels or diffusion metrics, the analysis can go deeper and explain why certain posts go viral or are considered dangerous.
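LIME explains a single prediction by perturbing the input, querying the model on the perturbed samples, and fitting a simple weighted surrogate model around that point. The sketch below uses a simpler relative of that idea, leave-one-word-out occlusion, rather than LIME itself: it deletes each word in turn and measures how much the toxicity score drops. The keyword-weight classifier (`WEIGHTS`, `BIAS`, `toxicity_prob`) is a hypothetical stand-in for a trained hate-speech model.

```python
import math

# Hypothetical keyword weights standing in for a trained classifier.
WEIGHTS = {"idiots": 2.5, "hate": 1.8, "stupid": 1.5, "love": -1.5}
BIAS = -2.0


def toxicity_prob(tokens):
    """Logistic score over token weights (toy stand-in for a real model)."""
    z = BIAS + sum(WEIGHTS.get(t.lower(), 0.0) for t in tokens)
    return 1.0 / (1.0 + math.exp(-z))


def occlusion_explanation(tokens):
    """Drop each token in turn and record how much the score falls."""
    full = toxicity_prob(tokens)
    scores = []
    for i, tok in enumerate(tokens):
        reduced = tokens[:i] + tokens[i + 1:]
        scores.append((tok, full - toxicity_prob(reduced)))
    # Most influential tokens first.
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


post = "I hate these stupid idiots".split()
print(f"P(toxic) = {toxicity_prob(post):.2f}")
for token, delta in occlusion_explanation(post):
    print(f"{token:>8}: {delta:+.3f}")
```

Here `idiots` ranks first and the neutral words `I` and `these` get exactly zero. Occlusion tests each word against the otherwise intact post, whereas LIME's random multi-word perturbations average each word's effect over many different contexts.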

Cybersecurity: Detecting Threats with Clarity

In the digital world, identifying malicious behavior is vital, but it is just as important to know why a threat was flagged. With XAI, malware and intrusion detection models do not just raise an alert; they also explain what led to it. Tools like SHAP and decision trees let security teams see which patterns triggered a detection, enabling faster responses and making it easier to dismiss false positives that would otherwise cost time and money.
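A decision tree is interpretable by construction: the explanation for an alert is simply the sequence of tests on the path from the root to the leaf. The hand-built tree below is a hypothetical sketch; its features (`failed_logins`, `distinct_ports`, `bytes_out_mb`), thresholds, and labels are invented, whereas a real tree would be learned from labeled traffic.

```python
# Hypothetical, hand-built tree; in practice it would be learned from labeled
# traffic. Internal nodes test one feature against a threshold; leaves hold a label.
TREE = {
    "feature": "failed_logins", "threshold": 5,
    "le": {
        "feature": "distinct_ports", "threshold": 100,
        "le": {"label": "benign"},
        "gt": {"label": "port_scan"},
    },
    "gt": {
        "feature": "bytes_out_mb", "threshold": 50,
        "le": {"label": "brute_force"},
        "gt": {"label": "brute_force_with_exfiltration"},
    },
}


def classify_with_path(node, event):
    """Walk the tree and record every test on the way to the leaf."""
    path = []
    while "label" not in node:
        feat, thr = node["feature"], node["threshold"]
        value = event[feat]
        if value <= thr:
            path.append(f"{feat} = {value} <= {thr}")
            node = node["le"]
        else:
            path.append(f"{feat} = {value} > {thr}")
            node = node["gt"]
    return node["label"], path


event = {"failed_logins": 2, "distinct_ports": 340, "bytes_out_mb": 1.2}
label, path = classify_with_path(TREE, event)
print("verdict:", label)
for step in path:
    print("  because", step)
```

For the sample event the verdict is `port_scan`, justified by two human-readable conditions: the kind of evidence an analyst can check against raw logs before escalating.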

Smart Agriculture: When the Field Understands AI

In modern agriculture, AI can recommend what to plant, when, and where. But for farmers to trust these suggestions, they need to know where they come from. XAI shows how variables such as soil type, weather, or humidity influence each recommendation. That trust helps farmers act on recommendations that improve yield and sustainability, and turns AI into a real, useful, and accessible ally for those who work the land.
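For a linear scoring model, the per-feature explanation has a closed form: each feature contributes its weight times its deviation from a reference point, and these contributions coincide with Shapley values computed against that same reference. A minimal sketch, assuming a hypothetical suitability score for one crop (the feature names, weights, and regional baseline are all invented):

```python
# Hypothetical linear suitability score for a single crop. Feature names,
# weights, and the regional baseline are invented for illustration.
WEIGHTS = {
    "soil_moisture_pct": 0.03,
    "rainfall_mm": 0.002,
    "humidity_pct": 0.01,
    "temp_c": -0.06,  # heat stress lowers suitability in this toy model
}
INTERCEPT = 1.0
BASELINE = {"soil_moisture_pct": 25.0, "rainfall_mm": 600.0,
            "humidity_pct": 60.0, "temp_c": 22.0}


def suitability(field):
    """Linear score: intercept plus weighted sum of the field's features."""
    return INTERCEPT + sum(w * field[f] for f, w in WEIGHTS.items())


def contributions(field):
    """Per-feature contribution w_i * (x_i - baseline_i).

    For a linear model these equal the Shapley values relative to the
    baseline point, and they sum to suitability(field) - suitability(BASELINE).
    """
    return {f: w * (field[f] - BASELINE[f]) for f, w in WEIGHTS.items()}


field = {"soil_moisture_pct": 35.0, "rainfall_mm": 400.0,
         "humidity_pct": 70.0, "temp_c": 30.0}
contrib = contributions(field)
print(f"score {suitability(field):.2f} vs. regional baseline {suitability(BASELINE):.2f}")
for feat in sorted(contrib, key=lambda f: abs(contrib[f]), reverse=True):
    print(f"{feat:>18}: {contrib[feat]:+.2f}")
```

In this example, high temperature is the largest negative factor and low rainfall the second, which is the sort of statement a farmer can compare against local experience.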