James Johnson

Data Engineer

Artificial Intelligence: Explainability and Interpretability in Machine Learning

1. Introduction to Modern AI

Artificial Intelligence (AI) has gained significant popularity in recent years. Greater computing power and the ability to store vast amounts of data have allowed us to train machine learning models (many of them known for a long time) with high accuracy, producing results that help us understand reality through the data we generate.

As we have overcome many of the technical challenges in AI, new ones have emerged. The very name "artificial intelligence" suggests that this debate extends beyond computing and into philosophy, so it is to be expected that many of these new challenges will have an existential component that prevents us from making the kind of categorical statements we can make in other fields.

2. The Problem of Reasoning in AI Systems

In this post, we will not delve into what artificial intelligence is; instead, we will address two concepts that, as mentioned above, brush against philosophy. Once we have trained a machine learning model, we use it to predict, classify, generate, and so on. But we often forget an aspect that is fundamental if we want to trust the decisions being made: the answer to the question "How did we arrive at this conclusion?" When our AI model makes a prediction, we know the result, but not the reasoning behind it.

To understand that reasoning, we must look at each type of machine learning model individually. Using a linear regression model is not the same as using a decision tree or a neural network (which has far more parameters and "neurons", or vertices).

3. Practical Example: Decision Tree in Machine Learning

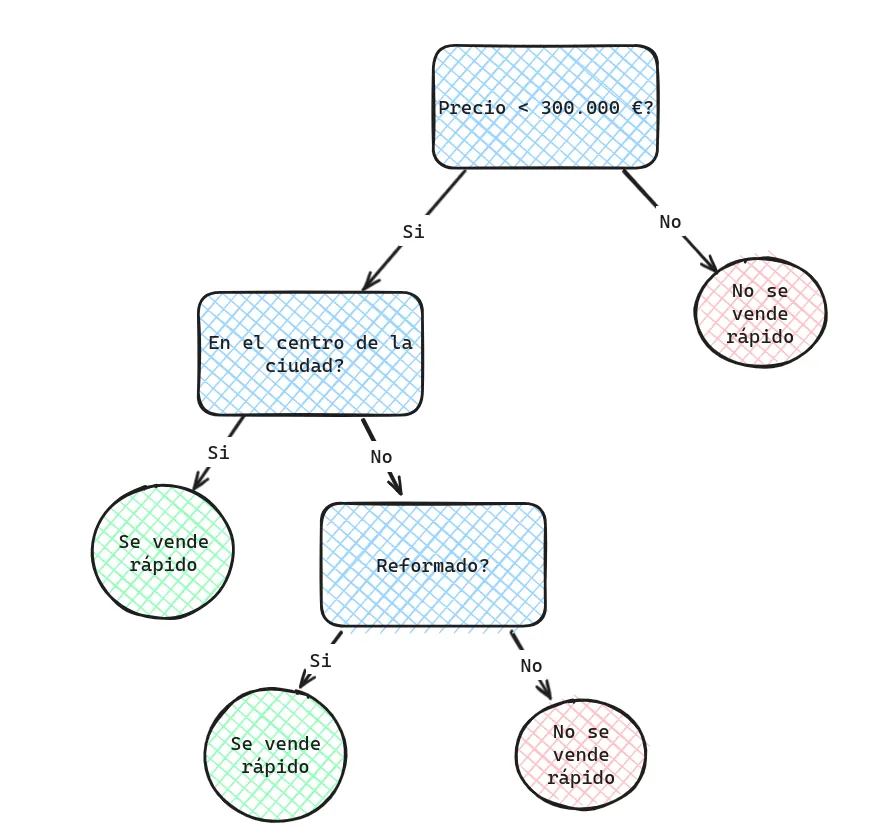

When we use a decision tree, we can understand more or less intuitively how a conclusion was reached. For example, suppose we are a real estate agency that needs a quick, automated way to tell whether an apartment will sell quickly, so that we can choose one sales strategy or another. To do this, we train a very simple AI model; the resulting tree is shown in the image above.

If an apartment worth €450,000 is predicted to be difficult to sell, we immediately understand why: its price is above €300,000. If we then have an apartment worth €150,000 that is not renovated but is in the city center, we can just as easily see why the model classified it as a quick sale: its price is below €300,000 and it is located in the center.
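Because a decision tree is just a sequence of threshold tests, its reasoning can be written out directly as code. The sketch below hand-codes the two paths described above and returns the path it followed alongside the prediction, so the explanation is simply the list of tests that fired. The `Apartment` class and `predict_with_path` function are hypothetical, treating exactly €300,000 as "not above" is an assumption, and the branch for apartments outside the city center (deciding on renovation) is a guess, since the text does not describe that part of the tree.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Apartment:
    price_eur: float
    in_city_center: bool
    renovated: bool


def predict_with_path(apt: Apartment) -> Tuple[str, List[str]]:
    """Return the predicted label and the tests followed to reach it."""
    path: List[str] = []
    # Root split described in the text: expensive apartments are hard to sell.
    # Treating exactly 300,000 as "not above" is an assumption.
    if apt.price_eur > 300_000:
        path.append("price > 300,000 EUR")
        return "slow sale", path
    path.append("price <= 300,000 EUR")
    # Second split described in the text: a central location means a quick sale.
    if apt.in_city_center:
        path.append("located in the city center")
        return "quick sale", path
    path.append("not in the city center")
    # Hypothetical branch: the text does not say how this case is decided.
    if apt.renovated:
        path.append("renovated")
        return "quick sale", path
    path.append("not renovated")
    return "slow sale", path


for apt in (Apartment(450_000, True, True), Apartment(150_000, True, False)):
    label, path = predict_with_path(apt)
    print(f"{apt.price_eur:,.0f} EUR -> {label}: {' -> '.join(path)}")
```

Running it prints, for each of the two apartments discussed above, the prediction followed by exactly the tests that justify it.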

4. Interpretability vs Explainability in Artificial Intelligence

Understanding how a linear regression model works may require a somewhat stronger mathematical foundation. However, with a neural network that has a large number of vertices and edges, we lose the traceability of each decision, no matter how well we understand how the model works in general. There seems to be a property intrinsic to each machine learning model that determines how easily we can understand its reasoning. This is where the concepts of interpretability and explainability in AI come into play.
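To make the loss of traceability concrete, the sketch below builds a small fully connected network with random weights in plain Python and counts its parameters. The layer sizes (one input, three hidden layers of seven nodes, one output), the ReLU activation and the random initialization are illustrative assumptions. Every output is still produced by ordinary arithmetic, but it mixes weights from every layer, so there is no single test or coefficient we can point to as "the reason" for a prediction.

```python
import random
from typing import List, Sequence, Tuple

# One layer = (weights[out][in], biases[out])
Layer = Tuple[List[List[float]], List[float]]


def init_mlp(layer_sizes: Sequence[int], seed: int = 0) -> List[Layer]:
    """Create a fully connected network with Gaussian random weights and zero biases."""
    rng = random.Random(seed)
    layers: List[Layer] = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def count_parameters(layers: List[Layer]) -> int:
    """Each layer contributes n_in * n_out weights plus n_out biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


def forward(layers: List[Layer], x: Sequence[float]) -> List[float]:
    """Propagate x through the network: ReLU on hidden layers, identity on the output."""
    activations = list(x)
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w_ij * a_j for w_ij, a_j in zip(row, activations)) + b_i
             for row, b_i in zip(weights, biases)]
        is_output = i == len(layers) - 1
        activations = z if is_output else [max(0.0, v) for v in z]
    return activations


linear = init_mlp([1, 1])             # no hidden layers: y = a*x + b
deep = init_mlp([1, 7, 7, 7, 1])      # three hidden layers of seven nodes
print(count_parameters(linear))       # 2
print(count_parameters(deep))         # 134
print(forward(deep, [2.0]))           # a single output value
```

The linear model has two numbers to inspect; the small network already has 134, and none of them carries a meaning on its own.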

4.1 Interpretability in Machine Learning

We could say that every AI model, by its nature, has some degree of interpretability. We can think of interpretability as an internal property that reflects how easy or difficult it is to understand the "reasoning" the model followed to reach a conclusion. As we saw in the previous image, the reasoning behind a decision tree is very easy to follow (as the number of nodes and edges grows it becomes harder, but we can still trace it). The same holds for a propositional rule engine: we can easily discern each "decision" it makes because we can follow the same reasoning as the model without much difficulty.
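A propositional rule engine is transparent for the same reason: every conclusion is produced by a rule whose premises we can read. The following forward-chaining sketch keeps a trace of each rule that fires, and that trace is the explanation. The fact names and the two rules are hypothetical, loosely modelled on the real estate example.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (premises, conclusion): if all premises are known facts, conclude.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Tuple[Set[str], List[str]]:
    """Apply rules until no new fact can be derived, recording each rule that fires."""
    known = set(facts)
    trace: List[str] = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                trace.append(f"{' AND '.join(sorted(premises))} -> {conclusion}")
                changed = True
    return known, trace


# Hypothetical rules for illustration only.
rules: List[Rule] = [
    (frozenset({"price_at_most_300k", "in_city_center"}), "quick_sale"),
    (frozenset({"quick_sale"}), "prioritize_listing"),
]
known, trace = forward_chain({"price_at_most_300k", "in_city_center"}, rules)
for step in trace:
    print(step)
```

Each printed line is one step of the engine's reasoning, in the order it happened.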

Even with a linear regression model, we can easily understand how a conclusion was reached (although it may be harder to understand how the model's parameters were obtained). For example, if we are told that the variable Y is predicted as Y = aX + b, we know exactly which formula produced each prediction; the tricky part is estimating the coefficients a and b from the data so that the model fits well. Things get complicated with a neural network that has 3 hidden layers of 7 nodes each. Every intermediate value can still be computed, but it becomes practically impossible to follow a human-readable line of reasoning explaining why the model made its decision. In this case, we would say that the model is difficult to interpret.
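For the linear model Y = aX + b, the prediction is the formula itself, and the tricky part, estimating a and b, has a closed-form answer under ordinary least squares. The sketch below assumes least squares as the fitting criterion and uses made-up data points; once a and b are known, each prediction decomposes into the contribution a * X plus the intercept b.

```python
from typing import Sequence, Tuple


def fit_simple_ols(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares estimates of a and b in y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: covariance of x and y divided by the variance of x (the 1/n factors cancel).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    a = sxy / sxx
    # The fitted line always passes through the point (mean_x, mean_y).
    b = mean_y - a * mean_x
    return a, b


xs = [1.0, 2.0, 3.0, 4.0]   # made-up data
ys = [3.0, 5.0, 7.0, 9.0]
a, b = fit_simple_ols(xs, ys)
x_new = 10.0
print(f"a = {a}, b = {b}")
print(f"prediction = a*x + b = {a}*{x_new} + {b} = {a * x_new + b}")
```

The second line of output is the whole "reasoning" of the model: one multiplication and one addition.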

4.2 Explainability in AI

Each model, then, has its own level of interpretability. But what happens with machine learning models that are very difficult to interpret? In that case, we can use external tools to shed some light on the decisions of our AI system. The results these tools provide fall under what we call explainability. In other words, a model's explainability is achieved through techniques external to the model that indicate how it "reasoned" to reach a decision. In the neural network case mentioned above, we could use Shapley values (named after Lloyd Shapley and taken from cooperative game theory, a field that gained momentum largely thanks to John von Neumann) to estimate how much each input variable contributed to a given prediction.
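The Shapley value of a feature is its average marginal contribution to the prediction over all coalitions of the other features. The sketch below computes it exactly by enumerating every coalition, which is only feasible for a handful of features; practical explainability libraries approximate it instead. Two simplifications are assumptions of this sketch: features outside a coalition are replaced by a single baseline input, and the toy `model` (with an interaction between its second and third inputs) stands in for a hard-to-interpret model.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values of each feature of x, relative to a baseline input."""
    n = len(x)

    def value(coalition: Set[int]) -> float:
        # Features in the coalition keep their real value; the rest take the baseline value.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phis: List[float] = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight |S|! (n - |S| - 1)! / n! for every coalition S of this size without i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def model(z: Sequence[float]) -> float:
    """Toy stand-in model: additive in z[0], interaction between z[1] and z[2]."""
    return 3.0 * z[0] + 2.0 * z[1] * z[2]


x = [1.0, 1.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(model, x, baseline)
print([round(p, 6) for p in phis])
print(sum(phis), model(x) - model(baseline))
```

The first feature receives its full additive effect of 3, while the interaction term is split equally between the second and third features, and the values add up to the difference between the prediction and the baseline prediction.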

5. Conclusion on Explainability and Interpretability in AI

In conclusion, every artificial intelligence model has some degree of interpretability, and we can apply techniques that provide explainability to any model. It is up to us to decide when to rely on a model's own interpretability and when to turn to external explainability techniques. For example, it might not make sense to apply LIME (Local Interpretable Model-agnostic Explanations) to a k-nearest neighbors model, since we can determine almost intuitively how that model predicts a value. Explainability becomes crucial when we deploy AI systems in environments where decisions have a significant impact on people's lives.
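The k-nearest neighbors case shows why an external technique can be unnecessary: the prediction is a vote among the closest training examples, so returning those examples alongside the prediction already explains it. The sketch below assumes Euclidean distance and a majority vote; the training points (price in thousands of euros, distance to the city center in km) are made up, and the features are left unscaled to keep the example short, although in practice they would usually be standardized first.

```python
from collections import Counter
from math import dist
from typing import List, Sequence, Tuple

Example = Tuple[Tuple[float, ...], str]  # (features, label)


def knn_predict(train: List[Example], query: Sequence[float], k: int = 3) -> Tuple[str, List[Example]]:
    """Predict by majority vote among the k nearest examples, and return those examples."""
    neighbors = sorted(train, key=lambda ex: dist(ex[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    label = votes.most_common(1)[0][0]
    return label, neighbors


# Made-up training data: (price in thousands of EUR, km to the city center) -> label
train: List[Example] = [
    ((150.0, 0.5), "quick sale"),
    ((180.0, 1.0), "quick sale"),
    ((220.0, 0.8), "quick sale"),
    ((450.0, 0.7), "slow sale"),
    ((500.0, 3.0), "slow sale"),
    ((280.0, 6.0), "slow sale"),
]
label, neighbors = knn_predict(train, (160.0, 0.6))
print(label)
for features, neighbor_label in neighbors:
    print(features, neighbor_label)
```

The printed neighbors are the explanation: the query apartment looks like these three, and all three sold quickly.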